I was reading the import.meta proposal for JavaScript over the weekend. It deals with the issue of accessing module meta information, such as what the current script element is. This is how you could do it in the browser, in frontend land: index.html loads foo.js, and foo.js starts with const currentScript = document. But how does this work in Node.js? This brings me to my learning of the weekend.

Let's do a quick refresher first: in Node.js, every module and required file is wrapped in a so-called module wrapper: (function (exports, require, module, __filename, __dirname) { /* module code */ }). Aha! It turns out that if we set process.

When a child process is spawned with the stdio: 'inherit' option, the child process inherits the main process stdin, stdout, and stderr. This causes the child process data event handlers to be triggered on the main process.stdout stream, making the script output the result right away.
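The code that this paragraph originally referred to is not part of this text, so here is a minimal sketch of what spawning with stdio: 'inherit' could look like. The helper name runInherited is hypothetical, and the child is simply another Node.js process (started through process.execPath) so the example does not depend on any particular shell command.

```javascript
const { spawn } = require('node:child_process');

// Hypothetical helper: runs a command that shares the parent's
// stdin, stdout, and stderr, and resolves with the child's exit code.
function runInherited(command, args = []) {
  return new Promise((resolve, reject) => {
    const child = spawn(command, args, { stdio: 'inherit' });
    // With 'inherit', child.stdout is null: the output goes straight
    // to the parent's stdout instead of through a pipe we would read.
    child.on('error', reject);
    child.on('exit', (code) => resolve(code));
  });
}

// The child's console.log shows up in the parent's terminal right away.
runInherited(process.execPath, ['-e', 'console.log("hello from the child")'])
  .then((code) => console.log(`child exited with code ${code}`))
  .catch((err) => console.error(`could not start child: ${err.message}`));
```

The trade-off is that the parent no longer sees the output as data it can process. If you need to inspect or transform what the child prints, keep the default piped stdio instead.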

Nodejs process code#

There are four different ways to create a child process in Node: spawn(), fork(), exec(), and execFile(). We're going to see the differences between these four functions and when to use each. The spawn function launches a command in a new process, and we can use it to pass that command any arguments. For example, we can spawn a new process that executes the pwd command.
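As a rough sketch of the pwd example described above: the runCommand helper below is a hypothetical wrapper, not something the child_process module provides. It collects the child's stdout through data events and resolves once the process has closed.

```javascript
const { spawn } = require('node:child_process');

// Hypothetical helper: spawns a command and collects everything it
// writes to stdout, resolving with the exit code and the output.
function runCommand(command, args = []) {
  return new Promise((resolve, reject) => {
    const child = spawn(command, args);
    let stdout = '';
    // child.stdout is a readable stream, so output arrives in chunks.
    child.stdout.on('data', (chunk) => {
      stdout += chunk;
    });
    // 'error' fires when the command cannot be started at all.
    child.on('error', reject);
    // 'close' fires after the process has ended and its streams are closed.
    child.on('close', (code) => resolve({ code, stdout }));
  });
}

// pwd is a Linux command; on Windows it would need a different command.
runCommand('pwd')
  .then(({ code, stdout }) => {
    console.log(`pwd exited with code ${code}: ${stdout.trim()}`);
  })
  .catch((err) => console.error(`could not run pwd: ${err.message}`));
```

Arguments go in the second parameter as an array, for example runCommand('ls', ['-l']).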

Nodejs process windows#

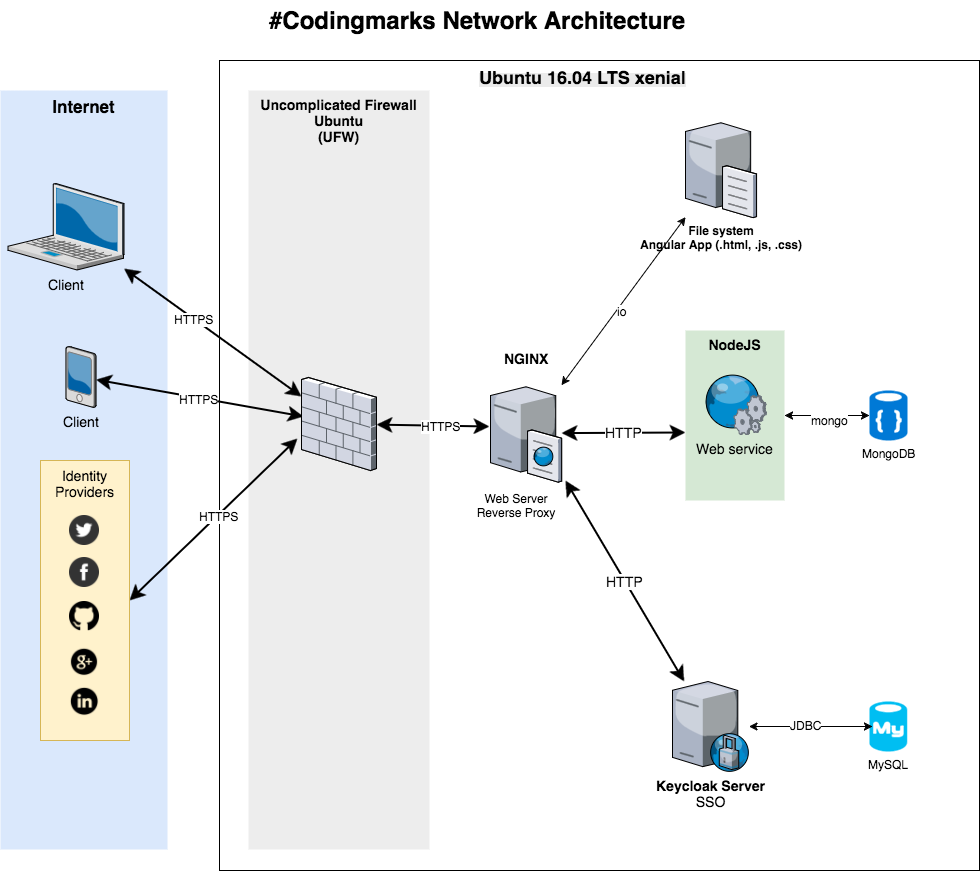

The child_process module enables us to access Operating System functionalities by running any system command inside a, well, child process. We can control that child process input stream, and listen to its output stream. We can also control the arguments to be passed to the underlying OS command, and we can do whatever we want with that command's output. We can, for example, pipe the output of one command as the input to another (just like we do in Linux), as all inputs and outputs of these commands can be presented to us using Node.js streams.

Note that the examples I'll be using in this article are all Linux-based. On Windows, you need to switch the commands I use with their Windows alternatives.
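To illustrate piping one command into another through Node.js streams, here is a small sketch. The pipeCommands helper is a hypothetical name, and the find and wc commands are Linux-based choices made for this illustration (the equivalent of running find . -type f | wc -l in a shell).

```javascript
const { spawn } = require('node:child_process');

// Hypothetical helper: connects the stdout of one command to the stdin
// of another and resolves with whatever the second command prints.
function pipeCommands([producerCmd, producerArgs], [consumerCmd, consumerArgs]) {
  return new Promise((resolve, reject) => {
    const producer = spawn(producerCmd, producerArgs);
    const consumer = spawn(consumerCmd, consumerArgs);

    // Both ends are ordinary streams, so pipe() wires them together.
    producer.stdout.pipe(consumer.stdin);

    let output = '';
    consumer.stdout.on('data', (chunk) => {
      output += chunk;
    });

    producer.on('error', reject);
    consumer.on('error', reject);
    consumer.on('close', () => resolve(output));
  });
}

// Count the files under the current directory.
pipeCommands(['find', ['.', '-type', 'f']], ['wc', ['-l']])
  .then((count) => console.log(`files found: ${count.trim()}`))
  .catch((err) => console.error(`pipeline failed: ${err.message}`));
```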

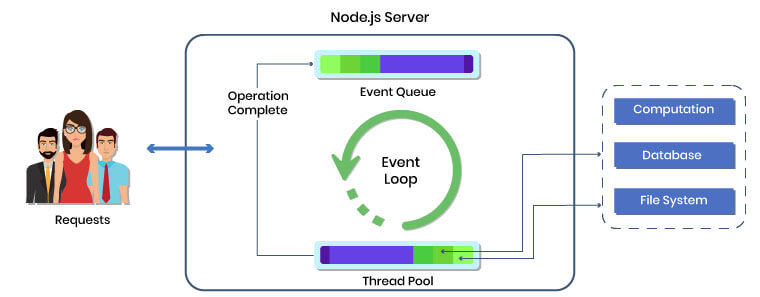

Single-threaded, non-blocking performance in Node.js works great for a single process. But eventually, one process in one CPU is not going to be enough to handle the increasing workload of your application. No matter how powerful your server may be, a single thread can only support a limited load. The fact that Node.js runs in a single thread does not mean that we can't take advantage of multiple processes and, of course, multiple machines as well.

Using multiple processes is the best way to scale a Node application. Node.js is designed for building distributed applications with many nodes. Scalability is baked into the platform, and it's not something you start thinking about later in the lifetime of an application.

This article is a write-up of part of my Pluralsight course about Node.js. I cover similar content in video format there. Please note that you'll need a good understanding of Node.js events and streams before you read this article. If you haven't already, I recommend that you read the two other articles on those topics before you read this one.

We can easily spin up a child process using Node's child_process module, and those child processes can easily communicate with each other through a messaging system.
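The messaging system mentioned above is available when a child is created with fork(). The sketch below is one possible illustration, not the article's own code: the worker source, the temporary file, and the doubleInChild helper are all assumptions made to keep the example in a single file.

```javascript
const { fork } = require('node:child_process');
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

// Source of a tiny worker module. It is written to a temporary file
// because fork() expects the path of a module to run.
const workerSource = `
  process.on('message', (n) => {
    process.send(n * 2);
  });
`;

// Hypothetical helper: sends a number to a forked child and resolves
// with the number the child sends back.
function doubleInChild(n) {
  const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'fork-demo-'));
  const workerPath = path.join(dir, 'worker.js');
  fs.writeFileSync(workerPath, workerSource);

  return new Promise((resolve, reject) => {
    const child = fork(workerPath);
    child.on('error', reject);
    child.on('message', (result) => {
      // Closing the channel lets the child exit once it has nothing left to do.
      child.disconnect();
      fs.rmSync(dir, { recursive: true, force: true });
      resolve(result);
    });
    child.send(n);
  });
}

doubleInChild(21)
  .then((result) => console.log(`child replied: ${result}`)) // child replied: 42
  .catch((err) => console.error(`fork failed: ${err.message}`));
```

In a real project the worker would normally live in its own file next to the parent script, and fork() would be given that path directly.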
